Introduction

Every iOS application starts with at least one thread when it launches. This is called the Main Thread. All UI work must be performed on this main thread.

Have you ever heard statements like these?

- Please perform this operation on the main thread

- Perform this expensive operation on a background thread and do not block the main thread

- This causes a deadlock / This causes a race condition

If yes, then you are dealing with multithreading.

Before taking a deep dive into multithreading, let us briefly go over a few concepts that come up often when working with multiple threads.

- CPU Clock Speed: The rate at which a CPU's clock ticks, which sets the pace at which the CPU executes instructions. Clock speed is measured in cycles per second (hertz). A CPU that completes 1 cycle per second has a clock speed of 1 hertz.

Similarly, a CPU with a clock speed of 2 GHz completes 2 billion cycles per second.

In the simplified model used in this article, a single core executes one instruction per clock tick. (Real CPUs use techniques such as pipelining to work on several instructions at once, but the simple model is enough here.)

- Single Core machine: A CPU that offers a single core is called a single core machine.

- Multi Core machine: A CPU that offers multiple cores is called a multi core machine.

- Single core vs Multi core CPU: In the ideal case, a dual core processor can fetch and execute twice as many instructions as a single core processor in the same amount of time, and a quad core processor up to four times as many. In practice the gain is smaller, because not every workload can be split evenly across cores.

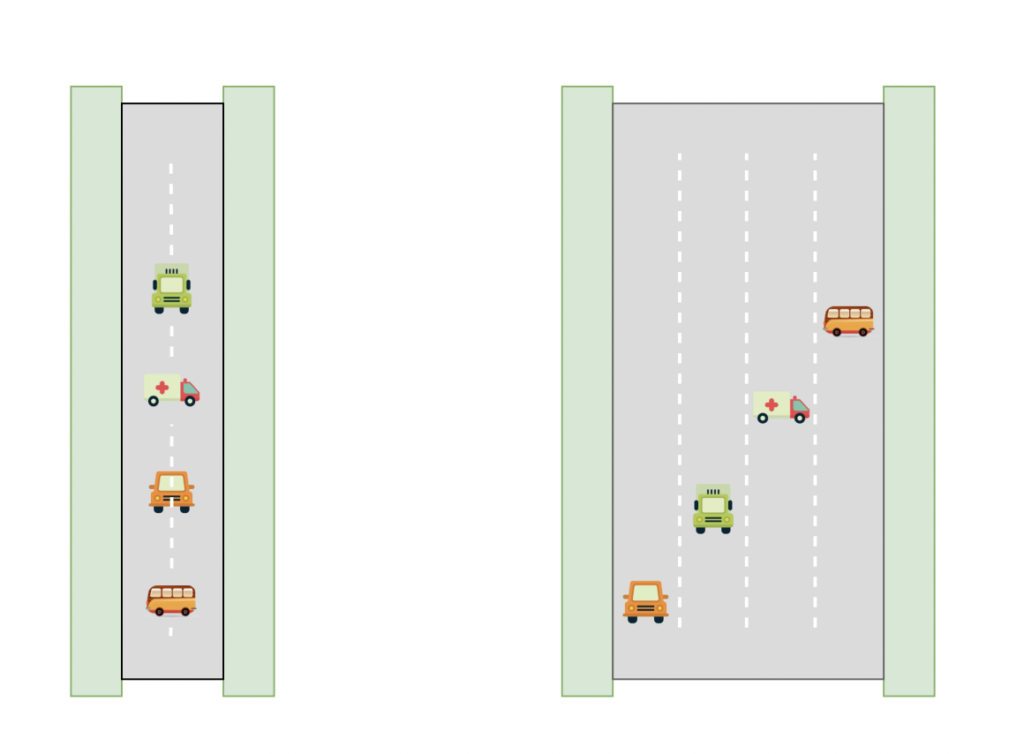

In the image above, on a single lane road all vehicles travel in a line, one after another, whereas on a four lane road, vehicles travel side by side at the same time.

By the same analogy, a multi core processor can process more instructions than a single core processor in a given amount of time.

- Thread: A thread is an independent sequence of instructions that the operating system can schedule and execute.

- Multithreading: When an app uses more than one thread, we can say it is multithreaded.

- Concurrency: Concurrency is the notion of making progress on multiple tasks during overlapping periods of time. Concurrency can be achieved even on a single core processor with the help of context switching: the CPU switches rapidly between threads, so they appear to run at the same time. Parallelism, where multiple threads truly run at the same instant on different cores, can only be achieved on multi core processors.

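Why do we need Multithreading?

Nowadays most devices (smartphones, laptops, etc.) come with multi core CPUs. To make efficient use of multiple cores, we need multithreading. Multithreading also keeps an app responsive: expensive work moved to a background thread no longer blocks the main thread, and therefore the UI.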

In iOS, multithreading can be achieved in several ways:

- Create and manage threads manually

- Grand Central Dispatch (GCD)

- Operation Queues

1. Create threads manually

Threads can be created manually. In that case, developers are responsible for creating new threads, managing them carefully, and releasing them from memory once they have finished executing. Creating and managing threads manually is a tedious and error-prone task.
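As a rough illustration of what "manually" means, here is a minimal Swift sketch using Foundation's `Thread` class. The function `sumOnManualThread`, the `ResultBox` helper and the thread name are hypothetical, and the semaphore exists only so the caller can wait for the result. That kind of coordination is exactly what manual threads leave to you.

```swift
import Foundation

// Hypothetical helper that carries the result from the worker thread back to the caller.
// @unchecked Sendable is acceptable here only because the semaphore below orders
// the write (on the worker thread) before the read (on the caller's thread).
final class ResultBox: @unchecked Sendable {
    var value = 0
}

func sumOnManualThread(_ numbers: [Int]) -> Int {
    let box = ResultBox()
    let done = DispatchSemaphore(value: 0)

    // Create the thread ourselves; this closure runs on the new thread.
    let worker = Thread {
        box.value = numbers.reduce(0, +)
        done.signal()   // tell the caller the work is finished
    }
    worker.name = "com.example.manual-worker"  // hypothetical name, visible in the debugger
    worker.start()   // nothing runs until start() is called

    done.wait()      // block the caller until the worker signals
    return box.value
}

print(sumOnManualThread(Array(1...100)))  // 5050
```

Even in this small case you have to start the thread, synchronize with it, and hand the result back yourself; GCD and operation queues take most of that work off your hands.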

2. Grand Central Dispatch

GCD is a low-level, C-based API for managing concurrent operations. Apple introduced GCD to make concurrency easier to manage. Developers no longer have to create and manage threads themselves: they submit tasks to dispatch queues, and the system creates and manages the threads that run those tasks.
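A minimal Swift sketch of that idea: the work is handed to one of GCD's global queues, and no `Thread` is ever created by our code. The function `fetchTotal` and its completion-handler shape are hypothetical, chosen only for illustration.

```swift
import Foundation

// Submit the "expensive" work to a global concurrent queue.
// GCD runs the closure on a thread from its own pool.
func fetchTotal(of numbers: [Int], completion: @escaping @Sendable (Int) -> Void) {
    DispatchQueue.global(qos: .userInitiated).async {
        let total = numbers.reduce(0, +)   // runs off the caller's thread
        // In an app, hop back with DispatchQueue.main.async before touching the UI.
        completion(total)
    }
}

// Usage: this demo blocks only so it can print the result; an app would not wait like this.
let finished = DispatchSemaphore(value: 0)
fetchTotal(of: [1, 2, 3, 4]) { total in
    print("total = \(total)")  // total = 10
    finished.signal()
}
finished.wait()
```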

There are three types of queues supported by GCD.

- Main Queue (serial queue)

- Global Queue (concurrent queue)

- Custom Queue (can be either a serial or a concurrent queue)
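In Swift, the three kinds of queues listed above can be obtained roughly as follows. The labels are hypothetical reverse-DNS strings, and `.utility` is just one of several quality-of-service classes you could pick.

```swift
import Foundation

// 1. Main queue: serial; its tasks run on the main thread, so all UI work goes here.
let mainQueue = DispatchQueue.main

// 2. Global queue: concurrent, shared and provided by the system; picked by quality of service.
let globalQueue = DispatchQueue.global(qos: .utility)

// 3. Custom queues: serial by default, concurrent when asked for explicitly.
let customSerial = DispatchQueue(label: "com.example.serial")  // hypothetical label
let customConcurrent = DispatchQueue(label: "com.example.concurrent",
                                     attributes: .concurrent)  // hypothetical label

print(customSerial.label)  // com.example.serial
```

A common pattern is to do slow work on a global or custom queue and then call `DispatchQueue.main.async` to deliver the result to the UI, since UI updates must happen on the main thread.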

What is a Queue?

A queue in general is like the line at a grocery store checkout: the person standing first in the line is the first to leave it. A queue works in First In, First Out (FIFO) order.

A software queue works the same way. A dispatch queue always starts tasks in the order they were added to it.
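To make the FIFO idea concrete, here is a tiny generic queue in Swift. `FIFOQueue` is a hypothetical type written for illustration; it is not part of GCD, whose dispatch queues handle their FIFO ordering internally.

```swift
// A minimal FIFO queue: items leave in the order they arrived.
// For simplicity it never reclaims the space of dequeued items.
struct FIFOQueue<Element> {
    private var items: [Element] = []
    private var head = 0   // index of the next item to dequeue

    var isEmpty: Bool { head == items.count }

    mutating func enqueue(_ item: Element) {
        items.append(item)   // new arrivals join at the back
    }

    mutating func dequeue() -> Element? {
        guard head < items.count else { return nil }
        let item = items[head]   // the oldest item leaves first
        head += 1
        return item
    }
}

var checkout = FIFOQueue<String>()
checkout.enqueue("Alice")
checkout.enqueue("Bob")
checkout.enqueue("Carol")
print(checkout.dequeue()!)  // Alice
```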

Tasks can be dispatched to a queue synchronously or asynchronously, and a queue itself can be either a serial queue or a concurrent queue.

A serial queue runs only one task at a time, performing tasks one after another. This is like the single lane road we saw above, where vehicles travel in a line one after another. The Main Queue is a serial queue. The main advantage of a serial queue is predictability, since tasks finish in the order they were added to the queue. The disadvantage is reduced performance, because its tasks never run in parallel.
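The ordering guarantee of a serial queue can be sketched with a custom `DispatchQueue`. The function and class names are hypothetical; the points to notice are that tasks added with `async` still finish in submission order, and that a final `sync` on the same queue runs only after everything queued before it.

```swift
import Foundation

// Hypothetical recorder. It is mutated only from inside the serial queue below,
// which runs one task at a time, so no extra locking is needed.
final class OrderRecorder: @unchecked Sendable {
    var finished: [Int] = []
}

func runOnSerialQueue(taskCount: Int) -> [Int] {
    let queue = DispatchQueue(label: "com.example.serial")  // hypothetical label; serial by default
    let recorder = OrderRecorder()

    for i in 1...taskCount {
        queue.async {
            recorder.finished.append(i)   // task i records that it has finished
        }
    }

    // This block runs only after every task queued before it has finished,
    // so it reads the complete list.
    return queue.sync { recorder.finished }
}

print(runOnSerialQueue(taskCount: 5))  // [1, 2, 3, 4, 5]
```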

A concurrent queue can run multiple tasks at the same time on different threads. This is like the four lane road we saw above, where multiple vehicles travel side by side. The Global Queues are concurrent queues. The advantage of using a concurrent queue is increased performance, as tasks execute simultaneously on different threads. The disadvantage is that the order in which tasks complete cannot be predicted: a task running on the second thread can finish before a task that started earlier on the first thread.
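A sketch of the same experiment on a custom concurrent queue shows the difference. The names are hypothetical, and the `Thread.sleep` calls only simulate uneven workloads. Because tasks can run at the same time, the shared log needs its own lock, and a `DispatchGroup` is used to wait until every task is done.

```swift
import Foundation

// Hypothetical thread-safe log. Tasks on a concurrent queue may run at the same
// time, so appends are protected with an NSLock.
final class CompletionLog: @unchecked Sendable {
    private var order: [Int] = []
    private let lock = NSLock()

    func record(_ id: Int) {
        lock.lock()
        order.append(id)
        lock.unlock()
    }

    var snapshot: [Int] {
        lock.lock()
        defer { lock.unlock() }
        return order
    }
}

func runOnConcurrentQueue(taskCount: Int) -> [Int] {
    let queue = DispatchQueue(label: "com.example.concurrent",
                              attributes: .concurrent)  // hypothetical label
    let group = DispatchGroup()
    let log = CompletionLog()

    for i in 1...taskCount {
        queue.async(group: group) {
            // Simulated work: earlier tasks sleep longer, so later ones may finish first.
            Thread.sleep(forTimeInterval: Double(taskCount - i) * 0.01)
            log.record(i)
        }
    }

    group.wait()  // block until every task in the group has finished
    return log.snapshot
}

let completionOrder = runOnConcurrentQueue(taskCount: 5)
print(completionOrder)  // contains 1 through 5, in an order that is not guaranteed
```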

Synchronous execution: A synchronous function returns control to the caller after the task is completed.

Asynchronous execution: An asynchronous function returns immediately, without waiting for the task in the queue to complete. Thus, an asynchronous function does not block the current thread.
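The difference between the two can be made visible with a small Swift sketch. `EventLog`, `demoSync` and `demoAsync` are hypothetical names. In `demoAsync` the task deliberately waits on a semaphore until the caller has logged its event; that is only there to make the output deterministic.

```swift
import Foundation

// Hypothetical thread-safe event log, used to see which side runs first.
final class EventLog: @unchecked Sendable {
    private var events: [String] = []
    private let lock = NSLock()

    func append(_ event: String) {
        lock.lock()
        events.append(event)
        lock.unlock()
    }

    var all: [String] {
        lock.lock()
        defer { lock.unlock() }
        return events
    }
}

// sync: the caller is blocked until the task has finished.
func demoSync() -> [String] {
    let worker = DispatchQueue(label: "com.example.worker")  // hypothetical label
    let log = EventLog()
    worker.sync { log.append("task finished") }
    log.append("caller continues")
    return log.all
}

// async: the call returns immediately and the caller keeps going.
func demoAsync() -> [String] {
    let worker = DispatchQueue(label: "com.example.worker")  // hypothetical label
    let log = EventLog()
    let gate = DispatchSemaphore(value: 0)  // holds the task back (demo only)
    let done = DispatchSemaphore(value: 0)  // lets the caller wait at the very end (demo only)

    worker.async {
        gate.wait()
        log.append("task finished")
        done.signal()
    }
    log.append("caller continues")  // runs while the task is still pending
    gate.signal()
    done.wait()
    return log.all
}

print(demoSync())   // ["task finished", "caller continues"]
print(demoAsync())  // ["caller continues", "task finished"]
```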

We can dispatch tasks either synchronously or asynchronously to either a serial queue or a concurrent queue. Whether a task is dispatched synchronously or asynchronously determines what happens to the caller: synchronous dispatch blocks the calling thread until the task has completed, whereas asynchronous dispatch adds the task to the queue and lets the caller continue immediately, without waiting for the task to finish.

Serial versus concurrent, on the other hand, is about the queue itself: how many tasks it runs at once, and therefore the order in which those tasks can finish.

Task execution in a Serial Queue: A serial queue always performs tasks one after another, so we can always be sure that the task which is first in the queue will be finished first.

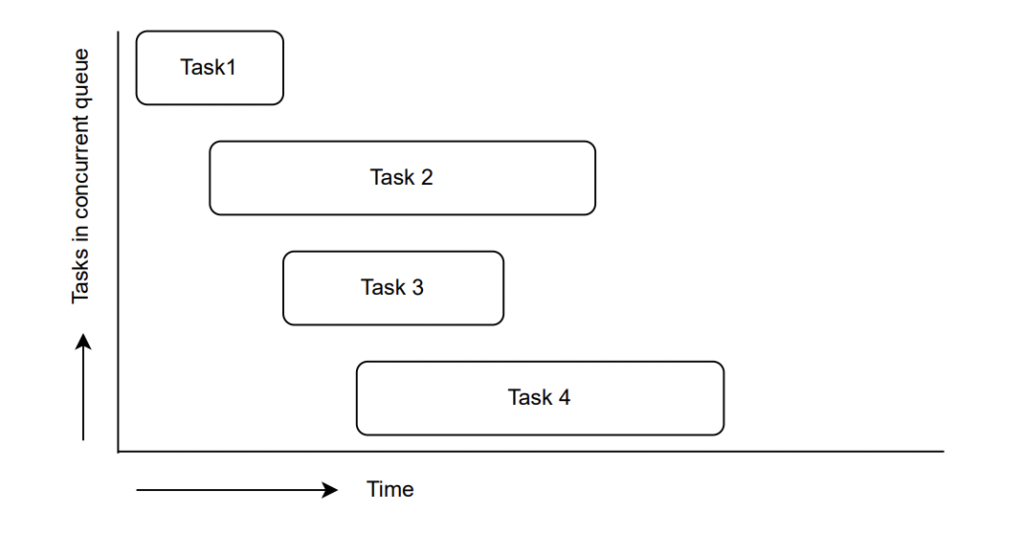

Task execution in a Concurrent Queue: In contrast, a concurrent queue does not guarantee that tasks finish in the order they were added to the queue. In a concurrent queue, task 2, which is added after task 1, might finish earlier than task 1. Similarly, task 3, added after task 2, can finish before task 2.

3. Operation Queue

An operation queue is a high-level abstraction built on top of GCD. Operation queues can also be used to achieve concurrency in iOS.

Operation queues provide more control over how tasks run. Being a wrapper over GCD, they add features such as dependencies between tasks, pausing and resuming a queue, and cancelling tasks.
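Dependencies are the feature that is hardest to reproduce with plain GCD, so here is a minimal sketch using Foundation's `OperationQueue` and `BlockOperation`. The pipeline steps (`download`, `parse`, `render`), the `StepLog` class and `runPipeline` are hypothetical.

```swift
import Foundation

// Hypothetical thread-safe log shared by the operations.
final class StepLog: @unchecked Sendable {
    private var steps: [String] = []
    private let lock = NSLock()

    func append(_ step: String) {
        lock.lock()
        steps.append(step)
        lock.unlock()
    }

    var all: [String] {
        lock.lock()
        defer { lock.unlock() }
        return steps
    }
}

func runPipeline() -> [String] {
    let log = StepLog()
    let queue = OperationQueue()
    queue.maxConcurrentOperationCount = 4  // independent operations could run in parallel

    // Hypothetical pipeline steps.
    let download = BlockOperation { log.append("download") }
    let parse = BlockOperation { log.append("parse") }
    let render = BlockOperation { log.append("render") }

    // parse waits for download; render waits for parse.
    parse.addDependency(download)
    render.addDependency(parse)

    // Added in the "wrong" order on purpose: the dependencies, not the order of
    // addition, decide when each operation may start.
    queue.addOperations([render, parse, download], waitUntilFinished: true)
    return log.all
}

print(runPipeline())  // ["download", "parse", "render"]
```

The other features mentioned above map to `isSuspended` on the queue (pause and resume) and `cancel()` on an operation or `cancelAllOperations()` on the queue; they are not exercised in this sketch.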